Weekly Journal: AI Powered Wearables are Helping People with Disabilities

[6 min read] Your weekend guide to getting ahead on the digital frontier. Advancing wearable technology is empowering people with disabilities to perform daily tasks with more independence.

Welcome to this week’s Weekly Journal 📔, your guide to the latest news & innovation in emerging technology, digital assets, and our exciting path to the Metaverse. This is week 154 of the 520 weeks of newsletters I have committed to, a decade of documenting how our physical and digital lives converge. New subscribers are encouraged to check out the history & purpose of this newsletter as well as the archive.

- Ryan

🌐 Digital Assets Market Update

To me, the Metaverse is the convergence of physical & virtual lives. As we work, play and socialise in virtual worlds, we need virtual currencies & assets. These have now reached mainstream finance as a defined asset class:

🔥🗺️ The heat map shows the 7-day change in price (red down, green up); block size represents market cap.

Bitcoin is falling because investors are getting nervous about a possible AI bubble and are pulling money out of riskier assets. This has caused a wave of selling that pushed prices down even faster. With near-term interest rate cuts looking less likely, people are less willing to hold assets that do not earn income, like Bitcoin.

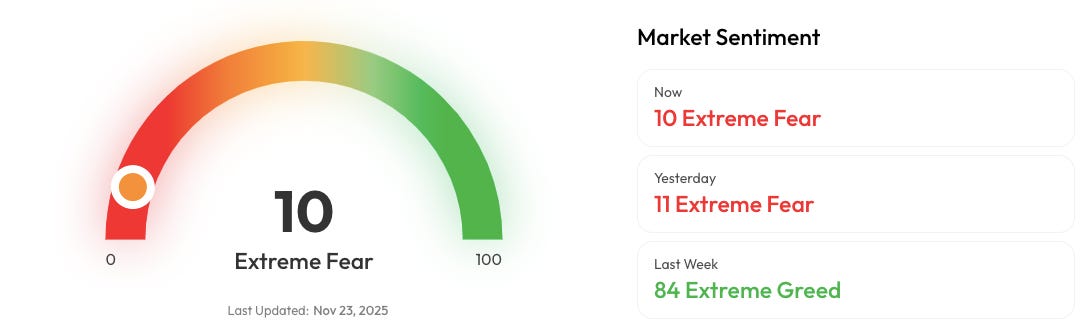

🎭 The Crypto Fear and Greed Index offers insight into the underlying psychological forces that drive the market’s volatility. Sentiment reveals itself across various channels, from social media activity to Google search trends, and when analysed alongside market data, these signals provide meaningful insight into the prevailing investment climate. The Fear & Greed Index aggregates these inputs, assigning a weighted value to each, and distils them into a single, unified score.

From 84 → 10 in a week! Market psychology is fascinating.
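For readers curious how a weighted score like this comes together, here is a minimal sketch in Python. It is not the index's actual methodology: the signal names, the relative weights, and the readings are all hypothetical, chosen only to show how several 0 to 100 inputs can be blended into one number.

```python
# Illustrative only: the signal names, weights, and readings below are
# hypothetical and are NOT the real Fear & Greed Index methodology.

def sentiment_score(signals, weights):
    """Blend 0-100 signal readings into a single weighted 0-100 score."""
    if set(signals) != set(weights):
        raise ValueError("signals and weights must cover the same inputs")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    # Weighted average: each reading counts in proportion to its weight.
    weighted_sum = sum(signals[name] * weights[name] for name in signals)
    return weighted_sum / total_weight


# Hypothetical readings (0 = extreme fear, 100 = extreme greed).
readings = {"volatility": 10, "social_media": 20, "search_trends": 30}
# Hypothetical relative weights: volatility counts twice as much as the others.
weights = {"volatility": 2, "social_media": 1, "search_trends": 1}

score = sentiment_score(readings, weights)
print(score)  # 17.5
```

Because the weights are divided by their total, they only need to be relative to one another; a heavily weighted input that swings sharply can drag the whole score with it.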

🗞️ Metaverse news from this week:

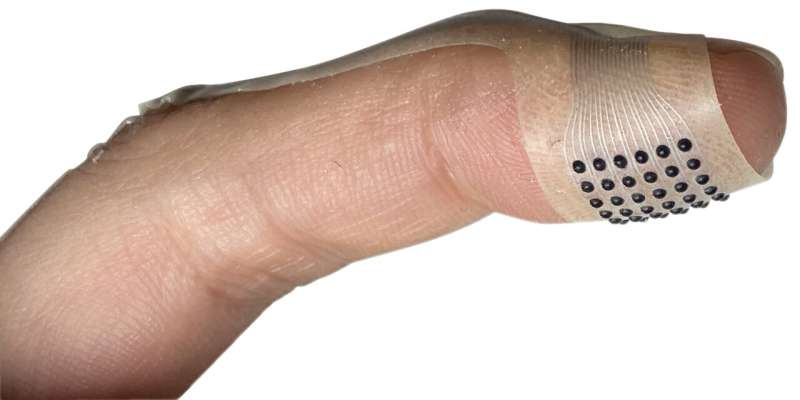

Fingertip Haptics Reach “Human Resolution”, Bringing Real Texture to Digital Worlds

Northwestern University engineers have unveiled VoxeLite, the first fingertip haptic device that matches the natural sensing abilities of human skin. The ultra-thin, flexible patch wraps around the finger and uses a dense grid of tiny electrostatic “nodes” that press into the skin with precise patterns. Each node acts like a pixel of touch, delivering detailed and rapid tactile cues.

This technology solves two long-standing challenges in haptics: spatial resolution (feeling fine textures) and temporal resolution (responding fast enough for realism). VoxeLite’s node array can move up to 800 times per second, covering the full range of human touch receptors.

In user tests, participants were able to recognise virtual textures and directional cues with high accuracy. The device can operate in active mode for creating synthetic textures on touchscreens, or passive mode where it becomes thin enough to blend into daily activity without blocking natural touch.

For the metaverse, VoxeLite represents a major step toward true digital tactility, allowing users to feel fabrics while shopping, sense materials in virtual environments, or experience richer interaction in accessible interfaces for people with vision impairments. It brings digital touch closer to the realism we already take for granted in sight and sound, and sets the stage for fully immersive mixed-reality experiences.

Quantum Breakthrough Unlocks New Control Over Graphene

Graphene is a remarkable “miracle” material, consisting of a single, atom-thin layer of tightly connected carbon atoms that remains both stable and highly conductive. These qualities make it valuable for many technologies, including flexible screens, sensitive detectors, high-performance batteries, and advanced solar cells.

Researchers from Göttingen and partner institutions have directly observed Floquet effects in graphene for the first time. This confirms that the material’s electronic properties can be light-engineered at ultrafast timescales, settling a long-standing scientific question. Using femtosecond momentum microscopy, the team showed that precise laser pulses can reshape graphene’s electronic states almost instantly. This proves that Floquet engineering works in metallic and semi-metallic materials, not only in insulators.

The discovery opens the door to tailoring quantum materials for specific uses in next-generation electronics, sensors, and computing. Scientists can now envision devices where electrons are guided and controlled with light, enabling designs that were previously impossible.

From a metaverse perspective, this breakthrough pushes forward the materials that will power future computing layers. Ultra-fast, light-responsive graphene could support faster processors, higher-density displays, and more efficient sensors, forming part of the hardware foundation for immersive, persistent digital worlds.

📖 Read of the week: What People Really Want from Social Media in the AR Metaverse

A new study out of UC Santa Cruz cuts through the hype and gives us something rare in the metaverse conversation: grounded evidence about what people actually feel comfortable doing in augmented reality. Researchers tested how users react to different mixes of privacy settings and content formats, from 2D video through to 3D overlays in shared public spaces. The result is a simple pattern that says a lot about where AR social platforms should head next.

People want AR on their own terms. Participants felt most at ease engaging with AR content in private spaces, not busy streets or cafés. They also preferred familiar formats: 2D video over 3D holograms, and dynamic movement over static images. In other words, the future may be spatial, but users still anchor themselves in formats they trust.

This matters for anyone building the metaverse layer that will sit over our physical world. Private-first design, optional rather than intrusive overlays, and a focus on comfort over novelty will guide which platforms gain traction. It also hints at something bigger: the metaverse will not arrive as a dramatic leap into 3D spaces. It will creep in through formats people already use, gradually layering richer experiences as comfort grows.

For builders, investors, and policymakers, this study is a reminder that adoption follows trust, not spectacle. And for anyone dreaming of persistent AR social worlds, it shows the path forward is steady, human, and rooted in design choices that respect the user’s space before anything else.

🎥 Watch of the week: Meta’s Hyperscape: Scan Your Room

The metaverse is almost here — and the Hyperscape hyperspace is hyper insane. Meta’s latest update lets you turn your actual room into a multiplayer VR hangout. It’s photorealistic, spatially accurate, and surprisingly easy to set up.

Up to 8 people can now step into your scanned space using a Quest 3/3S headset or the Meta Horizon app. Yes, that means friends chilling in your actual digital lounge.

How to try it:

Download Hyperscape (free) from the Meta Store

Scan your room (5–10 minutes)

Wait for processing (a few hours)

Reopen — and boom, you’re in

Whether you’re showing off your space, catching up with mates, or just marvelling at the tech, it’s genuinely mind-blowing.

Why it matters:

These kinds of hyper-real, shared digital environments are exactly what the metaverse has promised. Hyperscape is one of the first mainstream tools delivering on that promise — and it’s free.

AI Spotlight 🎨🤖🎵✍🏼: Ray‑Ban Meta / Oakley Meta Glasses - AI for Accessibility

In the Metaverse, AI will be critical for creating intelligent virtual environments and avatars that can understand and respond to users with human-like cognition and natural interactions.

News from Meta Platforms reveals that its next-generation AI glasses are being marketed not just as a stylish consumer gadget, but as a serious assistive tool for people with disabilities. According to its November 2025 announcement, these glasses allow wearers to make phone calls, send texts, receive environment descriptions, translate speech, and capture photos and videos hands-free, all via voice commands and built-in AI. (Source: About Facebook)

Key Features & Use Cases

The devices support voice control for calls, messages and media capture, which is crucial for users with limited mobility.

They include an environment-description capability and a “Call a Volunteer” feature, powered by a collaboration with Be My Eyes, that connects blind or low-vision users with volunteers to help interpret surroundings.

One wearer, a quadriplegic veteran, described being able to take photos of his baby for the first time as “incredible”.

Creators who are blind reported using the glasses to check camera settings and direct filming without needing assistance.

Other users with ADHD and autism said the glasses helped them stay present in the moment and avoid distraction by keeping their phones out of sight.

Why This Matters

These glasses illustrate a shift from AI for novelty to AI for empowerment, bridging the gap between consumer tech and meaningful accessibility.

In the emerging metaverse and spatial computing era, wearables like these could help ensure that digital worlds are inclusive, reachable and usable by people with varying abilities—not just the able-bodied.

Because the glasses capture photos and videos from the first-person point of view, they create new content-creation pathways for people who were previously restricted by mobility or vision.

By collaborating with disability-focused organisations and offering specialised training materials (e.g., for veterans with low vision), Meta signals a recognition that accessibility must be built in, not bolted on.

Caveats & Questions Ahead

Whilst powerful, wearables are still constrained by hardware cost, battery life, comfort and connectivity, all of which can limit accessibility in low-resource settings.

Privacy, data security and consent become especially sensitive when wearables capture continuous audio/video in real-world settings.

How well the AI’s contextual understanding will work “in the wild” for diverse accessibility needs (e.g., people with cognitive impairments) remains to be fully tested.

Will the metaverse built with such hardware be genuinely inclusive at scale, or will it remain a premium niche?

Bottom Line

Meta’s AI glasses are a strong step toward equalising access to digital and connected worlds. In a metaverse where virtual environments, avatars and immersive platforms are rapidly expanding, having hardware and AI that serve diverse abilities is vital. This is not just tech for “cool” use-cases—it’s tech with the potential to redefine participation.

That’s all for this week! If you have any organisations in mind that could benefit from keynotes about emerging technology, be sure to reach out. Public speaking is one of many services I offer.