Weekly Journal: Visual-first Wearables Heat Up

[6 min read] Your weekend guide to getting ahead on the digital frontier. AR startup Rokid has smashed through the $1M milestone on Kickstarter in less than a week with its new Rokid Glasses.

Welcome to this week’s Weekly Journal 📔, your guide to the latest news & innovation in emerging technology, digital assets, and our exciting path to the Metaverse. This is week 144 of the 520 weeks of newsletters I have committed to, a decade of documenting how our physical and digital lives converge. New subscribers are encouraged to check out the history & purpose of this newsletter as well as the archive.

- Ryan

🌐 Digital Assets Market Update

To me, the Metaverse is the convergence of physical & virtual lives. As we work, play and socialise in virtual worlds, we need virtual currencies & assets. These have now reached mainstream finance as a defined asset class:

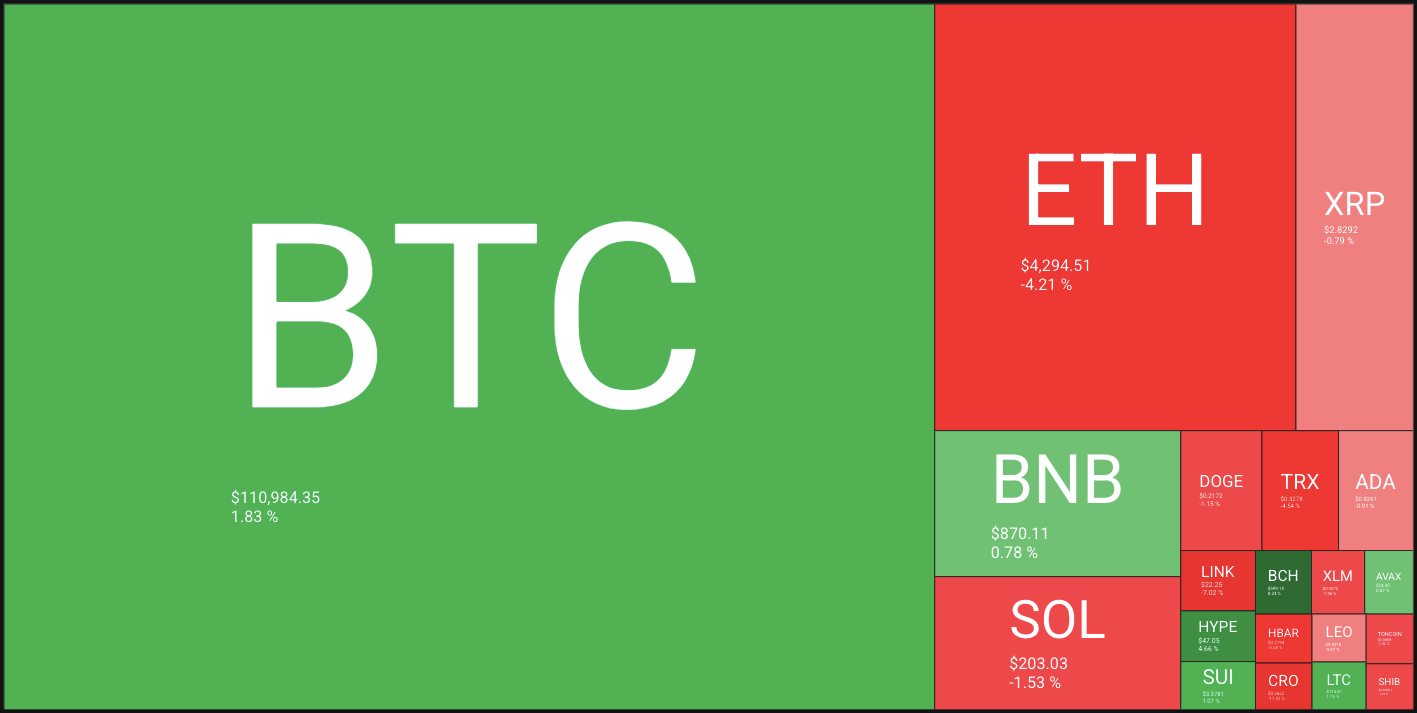

🔥🗺️ Heat map shows the 7-day change in price (red down, green up); block size represents market cap.

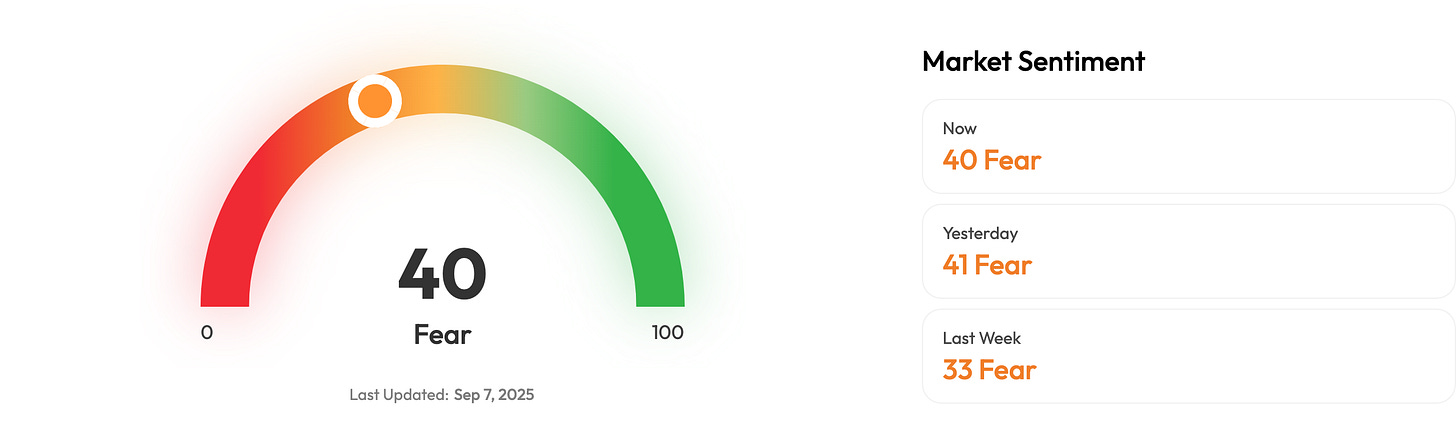

🎭 Crypto Fear and Greed Index is an insight into the underlying psychological forces that drive the market’s volatility. Sentiment reveals itself across various channels—from social media activity to Google search trends—and when analysed alongside market data, these signals provide meaningful insight into the prevailing investment climate. The Fear & Greed Index aggregates these inputs, assigning weighted value to each, and distils them into a single, unified score.
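To make the "weighted inputs, single score" idea concrete, here is a minimal sketch of a weighted average. The signal names, their values, and the weights are all hypothetical placeholders chosen for illustration; they are not the index's actual components or methodology.

```python
def fear_greed_score(signals: dict[str, float], weights: dict[str, float]) -> float:
    """Combine 0-100 sentiment signals into one score using a weighted average.

    Both dictionaries are illustrative assumptions, not the real index inputs.
    """
    total_weight = sum(weights.values())
    weighted_sum = sum(signals[name] * weights[name] for name in weights)
    return weighted_sum / total_weight


# Hypothetical readings, each already normalised to a 0 (fear) to 100 (greed) scale.
signals = {
    "volatility": 30.0,
    "social_media": 60.0,
    "search_trends": 50.0,
    "momentum": 40.0,
}

# Hypothetical weights; dividing by their total keeps the score on the 0-100 scale.
weights = {
    "volatility": 0.4,
    "social_media": 0.3,
    "search_trends": 0.2,
    "momentum": 0.1,
}

score = fear_greed_score(signals, weights)
print(round(score, 1))
```

Under these made-up numbers the score lands a little below 50, which would read as mild fear. Changing the weights shifts how much any one channel can move the final number.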

🗞️ Metaverse news from this week:

Rokid Glasses Hit $1M in First Week, Signalling Metaverse Appetite for Display-Driven Wearables

AR startup Rokid has smashed through the $1M milestone on Kickstarter in less than a week with its new Rokid Glasses—a lightweight smart glasses device featuring dual green micro-LED displays. The campaign, now sitting at over $1.2M USD, is slated to run until October 10th, suggesting demand for visual-first wearables is heating up far beyond the audio-only market occupied by Ray-Ban Meta and Oakley Meta HSTN.

Unlike smart glasses that act primarily as voice assistants, Rokid is betting on a screen-forward experience as a key gateway into the metaverse. Packing a Snapdragon AR1 chip, 12MP wide-FOV camera, and real-time AI translation tools (via ChatGPT and an internal LLM), the glasses blur the line between everyday convenience and immersive computing.

With competitors like Google and Meta developing display-equipped models, Rokid’s early success signals a shift in consumer expectations: the metaverse won’t just be built in headsets or VR rigs, but through normal-looking glasses that layer information and interaction seamlessly into the real world. As one of the fastest-funded AR wearables to date, Rokid’s momentum suggests that the metaverse’s next wave of adoption may start not with avatars in virtual plazas, but with AI-enhanced, display-driven eyewear worn on the street.

Switzerland Launches Apertus: Open-Source LLM Built for Transparency and Multilingual Worlds

Switzerland has unveiled Apertus, its first fully open-source large language model (LLM) trained exclusively on public data. Developed by EPFL, ETH Zurich, and the Swiss National Supercomputing Centre, Apertus comes in 8B and 70B parameter versions and supports over 1,000 languages—including Swiss German and Romansh.

Unlike most US and China-led models, Apertus releases everything openly: weights, architecture, datasets, and training methods. The goal is to provide a transparent, multilingual foundation for public research and European digital independence.

From a metaverse perspective, Apertus could be a pivotal enabler of pluralistic virtual worlds, where users interact in their native languages without depending on proprietary or geopolitically controlled AI. By making the stack openly available, Switzerland positions itself as a neutral hub for building the linguistic and cultural diversity that persistent digital spaces require.

As the metaverse matures, tools like Apertus may ensure it doesn’t become dominated by a handful of monolingual, closed AI ecosystems, but instead evolves as a polyglot, open, and decentralised digital commons.

Anthropic Pays $1.5B to Settle Landmark Author Piracy Lawsuit

AI firm Anthropic has agreed to pay $1.5 billion to settle a class-action lawsuit brought by authors who accused the company of stealing their books to train its Claude chatbot. The payout, pending approval by U.S. District Judge William Alsup, is the largest publicly reported copyright recovery in history.

The case, filed by authors including Andrea Bartz (We Were Never Here), Charles Graeber (The Good Nurse), and Kirk Wallace Johnson (The Feather Thief), alleged Anthropic maintained a library of more than seven million pirated books. Judge Alsup previously ruled that Anthropic’s training was “exceedingly transformative” under copyright law, but allowed the case to proceed over its reliance on pirated copies.

The settlement, which Anthropic says will “resolve the plaintiffs’ remaining legacy claims,” sets a historic precedent for how AI developers compensate creators whose work fuels model training. Plaintiffs’ lawyer Justin Nelson hailed it as “the first of its kind in the AI era,” noting it sends a message that “taking copyrighted works from pirate websites is wrong.” The deal may ripple across the AI industry, as OpenAI, Microsoft, and Meta face similar lawsuits. Analysts say it could accelerate new licensing frameworks, ensuring authors are paid when their work is used to train generative AI models.

Metaverse Lens: As AI becomes the backbone of immersive worlds, generative content, and digital avatars, this settlement highlights a central tension: the metaverse cannot thrive on stolen culture. Future growth will depend on systems that both compensate human creators and empower AI innovation, forging a fairer balance in the emerging digital economy.

📖 Read of the week:

I really enjoyed this guide on better structuring prompts:

My biggest takeaway was the RGIO model, a fast way to structure your prompt when you need something clean and effective. It stands for:

R – Role: Who is the AI?

G – Goal: What’s the task and end result?

I – Input: What does the AI need to know?

O – Output: What format should the result take?

Example:

Prompt:

You are a senior product manager. Your goal is to create a PRD (product requirements document) for a new onboarding flow. Here’s the input: product brief and user research summary. Output: A PRD with sections for objective, user stories, KPIs, and timeline.

Each element guides the AI logically, reducing ambiguity and making the result more usable right away.

Use it when: You have a specific outcome in mind and need to structure your request clearly and quickly.
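If you reuse this structure often, the four parts can be kept as separate fields and assembled on demand. The sketch below is one possible way to do that; the `RGIOPrompt` class and its field names are my own hypothetical helper, not something from the guide, and the sentence template simply mirrors the example prompt above.

```python
from dataclasses import dataclass


@dataclass
class RGIOPrompt:
    """Hypothetical container for the four RGIO parts."""

    role: str         # R: who is the AI?
    goal: str         # G: what is the task and end result?
    input_text: str   # I: what does the AI need to know?
    output: str       # O: what format should the result take?

    def render(self) -> str:
        # Join the four parts in R, G, I, O order as a single prompt string.
        return (
            f"You are {self.role}. "
            f"Your goal is to {self.goal}. "
            f"Here's the input: {self.input_text}. "
            f"Output: {self.output}."
        )


prompt = RGIOPrompt(
    role="a senior product manager",
    goal="create a PRD (product requirements document) for a new onboarding flow",
    input_text="product brief and user research summary",
    output="A PRD with sections for objective, user stories, KPIs, and timeline",
)
print(prompt.render())
```

Keeping the parts separate makes it easy to swap only the role or the output format while leaving the rest of the prompt unchanged.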

🎥 Watch of the week:

New research has revealed that over 70% of us are at risk of losing our digital assets by failing to protect them in our wills. With digital assets becoming more common, estate law will need to catch up.

AI Spotlight 🎨🤖🎵✍🏼: Pocket Therapists in the Metaverse

In the Metaverse, AI will be critical for creating intelligent virtual environments and avatars that can understand and respond to users with human-like cognition and natural interactions.

In New Zealand and beyond, people are turning to AI chatbots as “pocket therapists” — always-on companions that help in moments of stress or loneliness. Otago student Sam Zaia’s Psychologist bot has racked up more than 200 million conversations, with users reporting they feel “heard” and “understood” in ways human support sometimes can’t match. Surveys show 41% of young Kiwis have tried AI for mental well-being, often when traditional care feels out of reach.

But in the metaverse, this trend takes on new weight. Imagine logging into a virtual world where your AI therapist sits beside you in a group session, joins you for a morning walk, or simply checks in as you explore. Researchers at Otago see this hybrid model — AI as a supportive companion between real therapy sessions — as safer than full automation, while still embedding help into daily digital life.

The risks remain stark: AI has already been linked to cases of self-harm, and its tendency to “please” users can dangerously affirm harmful thoughts. Yet for millions navigating virtual social spaces, AI companions may soon become part of the emotional infrastructure — a tool alongside journaling, exercise, and real therapists, shaping how mental health care is experienced in the metaverse.

That’s all for this week! If you have any organisations in mind that could benefit from keynotes about emerging technology, be sure to reach out. Public speaking is one of many services I offer.